What Is Prompt Engineering?

Prompt engineering refers to the art and science of crafting inputs (prompts) to large language models (LLMs) like ChatGPT so that they produce desired, high-quality outputs. Since LLMs behave based on how they are prompted, the structure, specificity, and context of the prompt have a huge impact on what you get back.

The Prompt Report, a survey of prompting techniques published on arXiv (the preprint server hosted by Cornell University), catalogs dozens of techniques and best practices that affect the effectiveness, reliability, and usability of prompts.

Why Advanced Techniques Matter

Basic prompting (just stating a question or command) works reasonably well for many tasks. But when you want higher accuracy, creative or domain-specific output, or reliable reasoning (e.g. legal, academic, technical tasks), more advanced techniques can make the difference. They help avoid common problems:

- Hallucinations (made-up or incorrect content)

- Vague or off-topic responses

- Too generic or superficial outputs

- Inconsistent style or format

Key Advanced Techniques

Here are several advanced prompt engineering techniques that improve output quality with ChatGPT or similar LLMs.

1- Chain-of-Thought Prompting (CoT) & Zero-Shot Chain-of-Thought

One simple trick to get better answers from AI is to ask it to “think out loud” before giving the final answer. For example, instead of just asking “What is 27 × 43?”, you add “Let’s think step by step.” This makes the model explain its reasoning, which usually gives more accurate results on multi-step problems like math or logic. The best-known evidence is the paper Large Language Models are Zero-Shot Reasoners, where simply adding “Let’s think step by step” boosted performance on arithmetic and logical reasoning benchmarks. Researchers call this Zero-Shot Chain-of-Thought because you do not give the AI any worked examples; you just tell it to reason step by step.
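
As a minimal sketch of the idea, the snippet below appends the reasoning trigger to a question. The helper name `zero_shot_cot` and the `Q:`/`A:` layout are illustrative choices, not a required format. The arithmetic at the end is only a sanity check on the answer the model's reasoning should reach.

```python
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str, trigger: str = COT_TRIGGER) -> str:
    """Build a zero-shot chain-of-thought prompt: no examples, only a trigger."""
    return f"Q: {question.strip()}\nA: {trigger}"


prompt = zero_shot_cot("What is 27 × 43?")
print(prompt)

# Sanity check on the answer, done outside the model:
# 27 × 43 = 27 × 40 + 27 × 3 = 1080 + 81 = 1161
expected = 27 * 40 + 27 * 3
print(expected)
```

The prompt string is what you would send to the model; the model's reply should walk through steps similar to the decomposition in the comment.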

A related method is “Plan-and-Solve”. Instead of diving straight into the answer, you first tell the model to make a plan (for example, breaking a big problem into smaller parts). Then you ask it to carry out the plan one part at a time. This approach tends to reduce mistakes, because the model is less likely to skip important steps.
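
The two-stage version described here could be sketched as follows. `call_model` is a hypothetical stand-in for whatever function sends a prompt to your LLM and returns its reply, and `fake_model` only exists so the sketch runs without an API; the prompt wording is an assumption, not a canonical template.

```python
from typing import Callable


def plan_prompt(problem: str) -> str:
    """Stage 1: ask for a plan only."""
    return (
        f"Problem: {problem}\n"
        "Do not solve it yet. List the numbered steps needed to solve it."
    )


def solve_prompt(problem: str, plan: str) -> str:
    """Stage 2: ask the model to execute the plan it produced."""
    return (
        f"Problem: {problem}\n"
        f"Plan:\n{plan}\n"
        "Carry out the plan one step at a time, then state the final answer."
    )


def plan_and_solve(problem: str, call_model: Callable[[str], str]) -> str:
    plan = call_model(plan_prompt(problem))
    return call_model(solve_prompt(problem, plan))


def fake_model(prompt: str) -> str:
    """Hypothetical stub standing in for a real LLM call."""
    if "Do not solve it yet" in prompt:
        return "1. Multiply 27 by 40.\n2. Multiply 27 by 3.\n3. Add the results."
    return "1080 + 81 = 1161. Final answer: 1161"


print(plan_and_solve("What is 27 × 43?", fake_model))
```

Keeping the two prompts as separate functions makes it easy to inspect or edit the plan before the second call.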

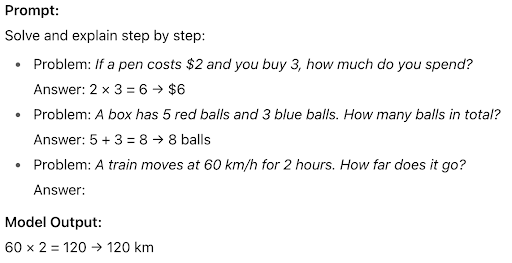

2- Few-Shot Prompting

Few-Shot prompting is about providing a few examples of input-output pairs so the model has a pattern to follow. This works well when you want a specific format or style of output.
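
A small sketch of how such a prompt might be assembled. The `Input:`/`Output:` labels and the sentiment examples are made up for illustration; any consistent labeling would do.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Build a prompt from an instruction, worked examples, and a new input."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # End with an open "Output:" so the model continues the pattern.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


examples = [
    ("The battery died after an hour.", "negative"),
    ("Setup took two minutes and it just works.", "positive"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    examples,
    "The screen cracked on day one.",
)
print(prompt)
```

Because every example ends with a one-word label, the model is nudged to answer the final input in the same one-word style.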

3- Prompt Chaining

Prompt chaining means breaking a complex task into smaller ones and feeding the output of each step into the next. Instead of asking ChatGPT to produce a polished final report in one go, you might first ask for an outline, then request each section, then ask for refinements. Each prompt then asks less of the model at once, which usually yields higher quality.
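
The outline, sections, refine sequence could be wired together roughly like this. `call_model` is a hypothetical function that sends one prompt and returns the reply; `fake_model` is a stub so the sketch runs offline, and the prompt wording is only an example.

```python
from typing import Callable


def write_report(topic: str, call_model: Callable[[str], str]) -> str:
    # Step 1: outline only.
    outline = call_model(
        f"Outline a report on {topic}. Give one section heading per line and nothing else."
    )
    headings = [line.strip() for line in outline.splitlines() if line.strip()]

    # Step 2: one prompt per section, each using a heading from step 1.
    sections = [
        call_model(f"Write the section '{heading}' of a report on {topic}.")
        for heading in headings
    ]

    # Step 3: refine the assembled draft.
    draft = "\n\n".join(sections)
    return call_model(f"Revise the draft below for clarity and consistent tone.\n\n{draft}")


def fake_model(prompt: str) -> str:
    """Hypothetical stub standing in for a real LLM call."""
    if prompt.startswith("Outline"):
        return "Introduction\nFindings\nRecommendations"
    if prompt.startswith("Write the section"):
        return f"(text for: {prompt})"
    return "(revised report)"


print(write_report("remote work", fake_model))
```

Each intermediate result is an ordinary string, so you can log it, review it, or correct it by hand before the next step runs.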

4- Role Prompting / Persona Framing

Assigning a role or persona to the model helps adjust tone, style, terminology, and level of detail. This is a powerful control mechanism. An example of such a prompt is:

“Picture yourself as a doctor in the consultation room. A patient asks, ‘How does this AI tool actually help with my diagnosis?’ How would you walk them through the process step by step?”
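
In chat-style interfaces the persona is often placed in a separate system message. The sketch below only builds the message list; the `role`/`content` dictionary layout follows a common chat-message convention and is an assumption about your API, and `with_persona` is a hypothetical helper name.

```python
def with_persona(role_description: str, user_message: str) -> list[dict[str, str]]:
    """Pair a persona (system message) with the user's question."""
    return [
        {"role": "system", "content": role_description},
        {"role": "user", "content": user_message},
    ]


messages = with_persona(
    "You are a doctor speaking with a patient in the consultation room. "
    "Use plain language and avoid jargon.",
    "How does this AI tool actually help with my diagnosis? "
    "Walk me through the process step by step.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the persona separate from the question lets you reuse the same role across many user messages.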

5- Retrieval-Augmented Generation (RAG)

For tasks that require real-time or factual information, retrieval-augmented generation (RAG) retrieves relevant passages from external knowledge bases or documents and adds them to the prompt. This gives the model current, checkable data to draw on rather than relying solely on its pretraining. It is well suited to generating up-to-date market reports or fact-checked responses.
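
To make the retrieve-then-prompt flow concrete, here is a toy sketch. It ranks documents by simple word overlap, which is a deliberate simplification; real systems typically use embedding-based search. The documents are invented placeholders and the function names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved passages in front of the question."""
    sources = retrieve(query, documents, k)
    context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(sources, start=1))
    return (
        "Answer using only the sources below. "
        'If they do not contain the answer, say "I do not know".\n\n'
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Invented placeholder documents, for illustration only.
docs = [
    "The cafeteria opens at 8 am on weekdays.",
    "Parking permits are renewed every January.",
    "The library closes at 9 pm on weekdays.",
]
print(rag_prompt("When does the library close?", docs, k=1))
```

The instruction to answer only from the sources is what ties the model's reply to the retrieved text instead of its pretraining.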


6- Iterative Prompting

After getting an initial response, ask the model to critique or refine it (point out weaknesses, expand, correct). This loop can help produce more polished outputs. It is also helpful when the first response is incomplete or has gaps.

For instance, you ask for an essay and the AI produces a draft. You notice it lacks examples, so you ask for a revision that includes them. The process repeats until you reach a polished, high-quality essay.
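
The draft, critique, revise loop can be sketched as below. Here the model critiques its own draft; in practice the critique can just as well come from you. `call_model` is a hypothetical function that sends a prompt and returns the reply, and `fake_model` is a stub so the example runs without an API.

```python
from typing import Callable


def refine(task: str, call_model: Callable[[str], str], rounds: int = 2) -> str:
    draft = call_model(task)
    for _ in range(rounds):
        critique = call_model(
            f"Critique the draft below. List its weaknesses and gaps.\n\n{draft}"
        )
        draft = call_model(
            "Rewrite the draft so it addresses the critique.\n\n"
            f"Task: {task}\n\nDraft:\n{draft}\n\nCritique:\n{critique}"
        )
    return draft


def fake_model(prompt: str) -> str:
    """Hypothetical stub standing in for a real LLM call."""
    if prompt.startswith("Critique"):
        return "The draft lacks concrete examples."
    if prompt.startswith("Rewrite"):
        return "Draft with examples."
    return "First draft."


print(refine("Write a short essay on prompt engineering.", fake_model))
```

A fixed number of rounds keeps the loop bounded; you could also stop early once the critique reports no remaining issues.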

7- Prompt Pattern Catalogs

Prompt pattern catalogs are like ready-made templates you can reuse. Research on prompt patterns suggests that they help solve common problems faster, much as design patterns do in software. They give you proven structures for writing prompts so you don’t always have to start from scratch.
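
One lightweight way to keep your own catalog is a dictionary of templates. The three entries below are illustrative and not taken from any published catalog; `apply_pattern` is a hypothetical helper built on Python's standard `string.Template`.

```python
from string import Template

# A tiny, illustrative catalog: pattern name -> reusable prompt template.
PATTERNS = {
    "persona": Template("Act as $role. $task"),
    "audience": Template("Explain $topic to $audience."),
    "format": Template("$task Respond as $fmt."),
}


def apply_pattern(name: str, **fields: str) -> str:
    """Fill in a named pattern; raises KeyError if the name or a field is missing."""
    return PATTERNS[name].substitute(**fields)


print(apply_pattern("persona", role="a patient tutor", task="Explain recursion."))
print(apply_pattern("audience", topic="recursion", audience="a ten-year-old"))
```

Because `substitute` fails loudly on a missing field, a half-filled template never reaches the model by accident.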

8- Specify Output Format & Constraints

When writing prompts, be very clear about the format you want. That means spelling out details like bullet points, tables, word limits, tone, or audience. These constraints guide the AI so it does not drift off-topic or get too wordy. For example, if you ask for a 150-word summary, or exactly 3 pros and 3 cons, the model is more likely to deliver the structure you expect.
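
Explicit constraints also make replies checkable. The sketch below asks for exactly 3 pros and 3 cons as JSON and then validates the reply; the JSON shape and the helper names are assumptions chosen for illustration.

```python
import json


def pros_cons_prompt(topic: str, n: int = 3) -> str:
    """State the format and the counts explicitly."""
    return (
        f"List exactly {n} pros and {n} cons of {topic}. "
        'Reply with JSON only, in the form {"pros": [...], "cons": [...]}.'
    )


def is_valid(reply: str, n: int = 3) -> bool:
    """Check that a reply is JSON with exactly n pros and n cons."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    return all(
        isinstance(data.get(key), list) and len(data[key]) == n
        for key in ("pros", "cons")
    )


print(pros_cons_prompt("remote work"))
print(is_valid('{"pros": ["a", "b", "c"], "cons": ["d", "e", "f"]}'))
print(is_valid("Here are some thoughts on remote work..."))
```

If validation fails, you can re-send the prompt or ask the model to fix its reply, which pairs naturally with the iterative approach in technique 6.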

Common Pitfalls & How to Avoid Them

- Vague terms or conflicting instructions confuse the model.

- Trying to include too much in a single prompt: piling up many requirements, constraints, and style requests can cause errors or degrade performance.

- Not supplying enough background context leads to generic or wrong output.

- Ignoring model limits: token limits, knowledge cutoff dates, and model bias mean you need to adapt prompts accordingly.

- Using the first prompt you try without testing variations or refining it. Often small tweaks yield big improvements.

Applying These Techniques with ChatGPT

Here are some practical tips when you’re using ChatGPT:

- Start prompts with a role or context: “You are an expert in X…”, “As a teacher, explain to a beginning student…”

- Use “step-by-step” instructions for reasoning tasks, for example “List the steps, then conclude.”

- When you care about accuracy, ask ChatGPT to cite its sources and check them yourself, or say “If you are not sure, say ‘I do not know’”.

- Provide examples of what you want. If you want a summary in bullet points with 3 items, show one.

- Use follow-ups to refine: Ask for shorter, more detailed, more formal, or more simplified versions.

- For domain-specific tasks (legal, medical, technical, etc.), combine RAG with domain knowledge or specialized datasets.

Conclusion

Mastering prompt engineering means going beyond giving simple commands. It is about writing prompts that are structured, detailed, and aware of the context. Using the right techniques can make ChatGPT’s answers more accurate, clear, and useful. To do this, always set clear limits for the output, give enough context and examples, and keep refining until you get what you want. Attention is also shifting toward reliability and ethics. For example, Anthropic’s recent guide suggests letting the model say “I don’t know” and verifying its claims, which makes its output more reliable. As AI improves, combining these techniques with domain knowledge and ethical rules will be even more important.